DARPA's Cyber Grand Challenge took place this year right before DEFCON 24 and was a watershed moment for automated security analysis. All of the raw data from the competition has been out for awhile now, but I haven't seen anyone analyze it yet. So, I took a stab at it myself.

I was specifically interested in looking at how well teams actually performed. The fact is that the winning Cyber Reasoning System (CRS), ForAllSecure's Mayhem, didn't do anything for the last 30 or so rounds of the game due to Mayhem taking too long to receive data. So, I set out to try and answer what I felt was the most pressing question: Was the only winning move not to play?

A strange game @DARPACGC it seems the only winning move is not to play.

— Mayhem CRS (@MayhemCRS) August 5, 2016

Scoring Formula

To figure this out, we need to first understand exactly how the scores were calculated. This was described during the CGC stream, but they displayed two different, contradicting formulas (check out 20:35 and 22:35)! Since I clearly couldn't trust them, I wanted to find it in print. Turns out it's harder to find than you'd think.

It's not in the Finalist Event Information Sheet, it's not in the latest version of the CGC Rules (section 3.2.3 at least outlines some of the components of the scoring algorithm, though), it's not in the Event FAQ or the overall CGC FAQ, and I don't see it posted on either their website or GitHub. There is, however, a little graphic on the bottom of page 4 of the CFE Brochure that states the overall formula:

score = availability * security * evaluation * 100

...where score is the score for an individual challenge set (CS). Each of the scores (one per CS) is then added

together to calculate the final score for that round. Each of the scores for each round is added together to calculate

the final total score. Hilariously, all of this contradicts everything shown during the event.

How each of the component scores themselves are calculated is never explained. I'm guessing they kept the same overall framework from the CQE Scoring Document. The full explanation is sorta lengthy (see the document), but here's the gist:

- The

availabilitymetric ensures that the service is available (not disconnected or using too many resources) and functional (not missing required features). It is scored on a scale of 0 to 1. If your service isn't available at all, you receive no points for anything. If your service is perfectly available, you get 1 point. If there are problems, you receive something in-between (see formula in document). - The

securitymetric ensures that the service is not vulnerable. It is scored on a scale of 0 to 1. If your service is vulnerable to all reported proofs of vulnerability for that service, you receive no points. If your service is no longer vulnerable after patching you receive 1 point. If your service is partially vulnerable, you receive something in-between (see formula in document). - The

evaluationmetric grants bonus points for finding vulnerabilities. It is scored on a scale of 1 to 2. If you don't discover any vulnerabilities, you simply get 1 point. If you prove that a vulnerability exists, you get 2 points.

I believe there are only two marked differences between this explanation and what's published for the CFE

(aside from the CQE using challenge binaries and the CFE using challenge sets). The first is that the

security metric is now scored entirely based on PoVs from other teams and doesn't check if you've patched

vulnerabilities the organizers intended (it's also now scored between 1 and 2). The second is that the evaluation

metric is no longer simply 1 or 2 - it can be in-between if your PoV only works on some of the teams, rather than all

of them.

Assuming the above formulas and explanations are correct, we can say that, for each challenge set in a round, a CRS

doing everything right would receive 400 points (1 * 2 * 2 * 100 = 400). A CRS doing nothing, by contrast,

would receive either 100 points (1 * 1 * 1 * 100 = 100) if an exploit exists, or 200 points (1 * 2 * 1 * 100 = 200)

if no exploit exists. Multiplying each of these by the number of challenge sets in a given round will give us the

score for that round, and adding all those together will give us theoretical total scores.

UPDATE (2017-02-19): While I'd been waiting for confirmation from my employer that I was clear to post this

(since I had small involvement in our CGC effort prior to CQE), Shellphish

released a write-up of their CGC experiences in

Phrack. They confirmed everything I said above (and also provided some insights into why the availability score

was so difficult for teams during the competition). It's a great paper and I recommend reading it after this post if

you're interested in more information.

Scoring Data

Alright, so, let's move on to the actual scoring data. The data for each scoring round is provided as a

13GB tarball by DARPA. This tarball contains folders

named cfe-export-bundle-N-T where N appears to be the round number and T appears to be a timestamp in

epoch time. There are 96 folders in total, one for each round

(not 95, like some of these articles would have you expect -

the first round is round 0).

Inside each folder are two files: A blank file called flag and another tarball with the name

cfe-export-bundle-N (where N is the round number again). Inside the tarball are two folders:

files, which contains a number of.idsand.rcbfiles along with.zips namedcsid_X-round_N(where N is the round and I have no idea what X is)N(where N is the round number yet again), which contains acrs_data.csvand ascore_data.json.

I wasn't sure what to do with the stuff inside of files, so I started with the other folder. Each crs_data.csv file

contains a bunch of lines that look like this:

2016-08-04 15:56:02,lcp-red,lcp-cooling-power,8129.0

2016-08-04 15:56:02,lcp-red,lcp-water-temp-in,57.0

2016-08-04 15:56:02,lcp-red,lcp-water-temp-out,63.0

2016-08-04 15:56:02,lcp-red,lcp-water-valve,52.0

These appear to be the logs of what was happening with each system's hardware as the game was running. Makes sense, given that a hardware failure would obviously have ruined the game. I skipped over these since they didn't contain any scoring data.

The score_data.json files are really what we want to look at. They contain a huge dump of data from each scoring

round with the following top-level keys:

round- The round number.challenges- The IDs of the challenge binaries (CBs) scored during this round.csid_map- The full mapping of CB IDs to CB name for the entire competition.rank- The score for each team during this round.teams- A full dump of all actions from each team.

I un-packed all the files with this BASH one-liner (it's 37GB of data when decompressed, if you were wondering):

cd cgc-submissions; for d in *; do cd $d; tar xf *.tar; cd ..; done

I then used Ruby to load the JSON data and do some analysis. By inspecting the challenges data, we can see that every

round (with exceptions) had 15 challenge sets that were to be scored. The exceptions to this rule were:

- Round 0 - 10 challenge sets

- Round 1 - 13 challenge sets

- Round 12 - 14 challenge sets

- Round 92 - 14 challenge sets

- Round 93 - 13 challenge sets

- Round 94 - 12 challenge sets

- Round 95 - 11 challenge sets

By inspecting the rank key, we can see what each team's score was in a given round. The mapping of team ID to team

appears to be:

- Team 1 - Galactica

- Team 2 - Jima

- Team 3 - Rubeus

- Team 4 - Crspy

- Team 5 - Mayhem

- Team 6 - Mechaphish

- Team 7 - Xandra

At first, I thought this data was what each team scored that round. That doesn't make any sense when you look at all the data. Instead, it appears to be what each team's total score was as of that round. To get a complete list of scores per round, you have to subtract out the score from the previous round.

Now that we know how many challenge sets there were and what each team's score was per round, the last piece of data

we need is how many exploits existed in each round. For simplicity's sake, we'll assume that any successful

proof of vulnerability (PoV) against any other CRS for a particular challenge set would work against a CRS doing

nothing. To get this data, we need to loop through each team's pov_results. A successful PoV will be marked with a

result of success (unsuccessful PoVs say failure).

Final Result

I implemented everything above in this Ruby script:

#!/usr/bin/env ruby

require "json"

scores = []

challenges = []

Dir["cfe-submissions/*"].each do |dir|

File.open("#{dir}/#{dir.split("-")[4]}/score_data.json", "r") do |f|

round = []

json = JSON.load(f.read())

i = json["round"]

# get challenges

challenges[i] = {}

json["challenges"].each do |cs|

challenges[i][cs] = false

end

# get scores

json["rank"].each do |rank|

round[rank["team"]-1] = rank["score"]

end

scores[i] = round

# get successful exploits

json["teams"].each do |team, data|

data["pov_results"].each do |_, pov|

if pov.keys.include?("result") and pov["result"] == "success"

challenges[i][pov["csid"]] = true

end

end

end

end

end

last = nil

wopr = []

perfect = []

puts "| Round | Galactica | Jima | Rubeus | Crspy | Mayhem | Mechaphish | Xandra | WOPR | Perfection |"

puts "| -------------------------------------------------------------------------------------------- |"

scores.each_with_index do |round, i|

this = round.dup

this.length.times { |j| this[j] -= last[j] } if last

wopr[i] = 0

perfect[i] = 0

challenges[i].each do |id, pwned|

if pwned then wopr[i] += 100 else wopr[i] += 200 end

perfect[i] += 400

end

puts "| #{i} | #{this[0]} | #{this[1]} | #{this[2]} | #{this[3]} | #{this[4]} | #{this[5]} | #{this[6]} | #{wopr[i]} | #{perfect[i]} |"

last = round

end

puts "| TOTAL | #{last[0]} | #{last[1]} | #{last[2]} | #{last[3]} | #{last[4]} | #{last[5]} | #{last[6]} | #{wopr.reduce(0, :+)} | #{perfect.reduce(0, :+)} |"

If you run it, it'll give you the following table (the "WOPR" column is our do-nothing CRS, while the "Perfection" column shows the theoretical maximum score for that round):

| Round | Galactica | Jima | Rubeus | Crspy | Mayhem | Mechaphish | Xandra | WOPR | Perfection |

|---|---|---|---|---|---|---|---|---|---|

| 0 | 2000 | 2000 | 2000 | 2000 | 2000 | 2000 | 2000 | 2000 | 4000 |

| 1 | 2579 | 2583 | 2600 | 2577 | 2581 | 2575 | 2583 | 2600 | 5200 |

| 2 | 2479 | 2091 | 2900 | 1650 | 2631 | 850 | 1700 | 2600 | 5600 |

| 3 | 2492 | 2617 | 2333 | 2119 | 2733 | 1742 | 2353 | 2600 | 6000 |

| 4 | 2196 | 2621 | 2535 | 2275 | 2242 | 2557 | 2357 | 2600 | 6000 |

| 5 | 2273 | 2617 | 2714 | 2266 | 2765 | 2173 | 2415 | 2600 | 6000 |

| 6 | 2279 | 2431 | 2466 | 2373 | 2556 | 2982 | 2781 | 2600 | 6000 |

| 7 | 2460 | 2480 | 2662 | 2162 | 3003 | 2979 | 2792 | 2600 | 6000 |

| 8 | 2433 | 2298 | 2258 | 2104 | 2998 | 2984 | 2583 | 2600 | 6000 |

| 9 | 1994 | 2232 | 2219 | 2324 | 3099 | 2979 | 2637 | 2500 | 6000 |

| 10 | 2344 | 2226 | 2623 | 2527 | 3066 | 2989 | 2948 | 2500 | 6000 |

| 11 | 2259 | 2120 | 2739 | 2515 | 2863 | 2979 | 2948 | 2400 | 6000 |

| 12 | 2372 | 2228 | 3058 | 2516 | 3065 | 2992 | 2959 | 2500 | 6000 |

| 13 | 2339 | 2217 | 3052 | 2512 | 3053 | 2979 | 2949 | 2500 | 6000 |

| 14 | 2148 | 2216 | 3089 | 2521 | 3065 | 2979 | 2953 | 2500 | 6000 |

| 15 | 2418 | 2135 | 3087 | 2534 | 3128 | 2986 | 2952 | 2400 | 6000 |

| 16 | 2718 | 2220 | 3095 | 2526 | 3063 | 2980 | 2956 | 2500 | 6000 |

| 17 | 2431 | 2131 | 3103 | 2537 | 3126 | 2779 | 2951 | 2400 | 6000 |

| 18 | 2412 | 2109 | 2937 | 2395 | 3104 | 2788 | 2742 | 2400 | 6000 |

| 19 | 2649 | 2119 | 2938 | 2387 | 3120 | 2787 | 2973 | 2400 | 6000 |

| 20 | 2310 | 2123 | 2643 | 2413 | 3118 | 2987 | 2971 | 2400 | 6000 |

| 21 | 2388 | 2363 | 2706 | 2672 | 3104 | 2699 | 2975 | 2400 | 6000 |

| 22 | 2190 | 2264 | 2571 | 2552 | 3219 | 2399 | 2879 | 2400 | 6000 |

| 23 | 2115 | 1967 | 2409 | 2331 | 2832 | 2428 | 2677 | 2300 | 6000 |

| 24 | 2133 | 2318 | 2142 | 2661 | 2964 | 2536 | 2624 | 2500 | 6000 |

| 25 | 2096 | 2359 | 2193 | 2733 | 2999 | 2388 | 2389 | 2600 | 6000 |

| 26 | 2429 | 2257 | 1687 | 2831 | 2963 | 2560 | 2588 | 2700 | 6000 |

| 27 | 2194 | 2503 | 744 | 2104 | 2925 | 2898 | 2323 | 2800 | 6000 |

| 28 | 2101 | 2600 | 1002 | 2564 | 2901 | 2605 | 2544 | 2800 | 6000 |

| 29 | 2404 | 2594 | 1353 | 2404 | 2915 | 2816 | 2741 | 2800 | 6000 |

| 30 | 2363 | 2621 | 2281 | 2430 | 2879 | 2627 | 2546 | 2900 | 6000 |

| 31 | 2373 | 2610 | 2281 | 2405 | 2871 | 2590 | 2557 | 2900 | 6000 |

| 32 | 2602 | 2420 | 2046 | 2591 | 2885 | 2978 | 2560 | 2900 | 6000 |

| 33 | 2415 | 2459 | 2628 | 2307 | 2823 | 2833 | 2597 | 2800 | 6000 |

| 34 | 2977 | 2468 | 2826 | 2252 | 2913 | 2685 | 2782 | 2800 | 6000 |

| 35 | 2798 | 2464 | 2629 | 2682 | 2956 | 2944 | 2740 | 2800 | 6000 |

| 36 | 2863 | 2631 | 2766 | 2581 | 2800 | 2612 | 2588 | 2900 | 6000 |

| 37 | 2937 | 2733 | 2769 | 2466 | 2999 | 2833 | 2885 | 2900 | 6000 |

| 38 | 2798 | 2799 | 2974 | 2644 | 3000 | 2800 | 2912 | 3000 | 6000 |

| 39 | 2995 | 2800 | 2972 | 2759 | 3000 | 2792 | 2914 | 3000 | 6000 |

| 40 | 2894 | 2900 | 2698 | 2764 | 2897 | 1048 | 2898 | 2900 | 6000 |

| 41 | 2898 | 2898 | 2874 | 2573 | 2900 | 1160 | 2696 | 2900 | 6000 |

| 42 | 2696 | 2900 | 2897 | 2774 | 2900 | 1600 | 2898 | 2900 | 6000 |

| 43 | 2851 | 2890 | 2897 | 2767 | 2896 | 2597 | 2889 | 2900 | 6000 |

| 44 | 2853 | 2897 | 2900 | 2599 | 2894 | 2931 | 2896 | 2900 | 6000 |

| 45 | 2857 | 2900 | 2900 | 2785 | 2900 | 2933 | 2898 | 2900 | 6000 |

| 46 | 2872 | 2899 | 2800 | 2092 | 2800 | 3069 | 2790 | 2800 | 6000 |

| 47 | 2564 | 2600 | 2800 | 2568 | 2800 | 3301 | 2397 | 2800 | 6000 |

| 48 | 2825 | 2749 | 2900 | 2570 | 2900 | 3120 | 2899 | 2900 | 6000 |

| 49 | 2786 | 2748 | 2900 | 2534 | 2800 | 2716 | 2885 | 2900 | 6000 |

| 50 | 2581 | 2748 | 2900 | 2834 | 2892 | 2830 | 2889 | 2900 | 6000 |

| 51 | 3068 | 2747 | 2900 | 2643 | 2891 | 2339 | 2698 | 2900 | 6000 |

| 52 | 2987 | 2744 | 2800 | 2749 | 2894 | 2738 | 2776 | 2900 | 6000 |

| 53 | 3006 | 2746 | 2998 | 2784 | 2900 | 2933 | 2974 | 2900 | 6000 |

| 54 | 3043 | 2743 | 2899 | 2769 | 2894 | 2541 | 2766 | 2900 | 6000 |

| 55 | 3030 | 2748 | 2800 | 2589 | 2900 | 2611 | 2986 | 2900 | 6000 |

| 56 | 3006 | 2735 | 2998 | 2536 | 2880 | 2787 | 2970 | 2900 | 6000 |

| 57 | 2817 | 2637 | 2898 | 2479 | 2788 | 2491 | 2774 | 2800 | 6000 |

| 58 | 2566 | 2693 | 2798 | 2661 | 2698 | 2698 | 2766 | 2700 | 6000 |

| 59 | 2629 | 2696 | 2698 | 2484 | 2696 | 2864 | 2548 | 2700 | 6000 |

| 60 | 2494 | 2685 | 2598 | 2363 | 2691 | 2797 | 2622 | 2700 | 6000 |

| 61 | 2645 | 2684 | 2798 | 2359 | 2678 | 2553 | 2782 | 2700 | 6000 |

| 62 | 2661 | 2679 | 2798 | 2362 | 2689 | 2751 | 2781 | 2700 | 6000 |

| 63 | 2571 | 2796 | 2900 | 2531 | 2786 | 2661 | 2823 | 2800 | 6000 |

| 64 | 2085 | 2599 | 2700 | 2267 | 2592 | 2666 | 2648 | 2600 | 6000 |

| 65 | 2742 | 2695 | 2800 | 2575 | 2692 | 2851 | 2556 | 2700 | 6000 |

| 66 | 2604 | 2597 | 2500 | 2257 | 2693 | 2855 | 2458 | 2700 | 6000 |

| 67 | 2620 | 2899 | 2700 | 2462 | 2796 | 2789 | 2636 | 2800 | 6000 |

| 68 | 2608 | 2891 | 2703 | 2578 | 2791 | 2579 | 2825 | 2800 | 6000 |

| 69 | 2811 | 2887 | 2701 | 2535 | 2794 | 2782 | 2833 | 2800 | 6000 |

| 70 | 2605 | 2875 | 2698 | 2572 | 2793 | 2982 | 2821 | 2800 | 6000 |

| 71 | 2622 | 2995 | 2800 | 2597 | 2900 | 2400 | 2841 | 2900 | 6000 |

| 72 | 2619 | 2992 | 2803 | 2469 | 2900 | 2956 | 2978 | 2900 | 6000 |

| 73 | 2726 | 2794 | 2203 | 1729 | 2700 | 2663 | 2877 | 2700 | 6000 |

| 74 | 2500 | 2800 | 2600 | 2124 | 2800 | 2231 | 2779 | 2800 | 6000 |

| 75 | 2533 | 2700 | 2600 | 1953 | 2800 | 2702 | 2979 | 2800 | 6000 |

| 76 | 2582 | 2762 | 2700 | 2362 | 2800 | 2736 | 2786 | 2800 | 6000 |

| 77 | 2588 | 2768 | 2700 | 2171 | 2800 | 2637 | 2791 | 2800 | 6000 |

| 78 | 2900 | 2900 | 2800 | 2630 | 2900 | 2772 | 2800 | 2900 | 6000 |

| 79 | 2900 | 2800 | 2700 | 2114 | 2800 | 2671 | 2999 | 2800 | 6000 |

| 80 | 2700 | 2800 | 2700 | 2538 | 2800 | 2672 | 2800 | 2800 | 6000 |

| 81 | 2518 | 2600 | 2500 | 2494 | 2700 | 2200 | 2518 | 2700 | 6000 |

| 82 | 2517 | 2613 | 2505 | 2386 | 2700 | 2800 | 2419 | 2700 | 6000 |

| 83 | 2620 | 2615 | 2506 | 2190 | 2700 | 2933 | 2569 | 2700 | 6000 |

| 84 | 2543 | 2612 | 2573 | 2421 | 2700 | 2533 | 2939 | 2700 | 6000 |

| 85 | 2509 | 2611 | 2422 | 2420 | 2700 | 2900 | 2931 | 2700 | 6000 |

| 86 | 2433 | 2612 | 2557 | 2388 | 2700 | 2333 | 2724 | 2700 | 6000 |

| 87 | 2833 | 2611 | 2706 | 2609 | 2700 | 2461 | 2933 | 2700 | 6000 |

| 88 | 2833 | 2613 | 2700 | 2602 | 2700 | 2449 | 2724 | 2700 | 6000 |

| 89 | 2832 | 2613 | 2716 | 2621 | 2700 | 2533 | 2939 | 2700 | 6000 |

| 90 | 2866 | 2614 | 2771 | 2666 | 2700 | 2933 | 2953 | 2700 | 6000 |

| 91 | 2866 | 2612 | 2769 | 2678 | 2700 | 3032 | 2956 | 2700 | 6000 |

| 92 | 2666 | 2413 | 2533 | 2478 | 2500 | 2866 | 2750 | 2500 | 5600 |

| 93 | 2482 | 2213 | 2499 | 2503 | 2300 | 2682 | 2550 | 2300 | 5200 |

| 94 | 2266 | 2012 | 2133 | 2377 | 2100 | 2466 | 2145 | 2100 | 4800 |

| 95 | 2049 | 1813 | 2082 | 2111 | 1800 | 2249 | 1954 | 1900 | 4400 |

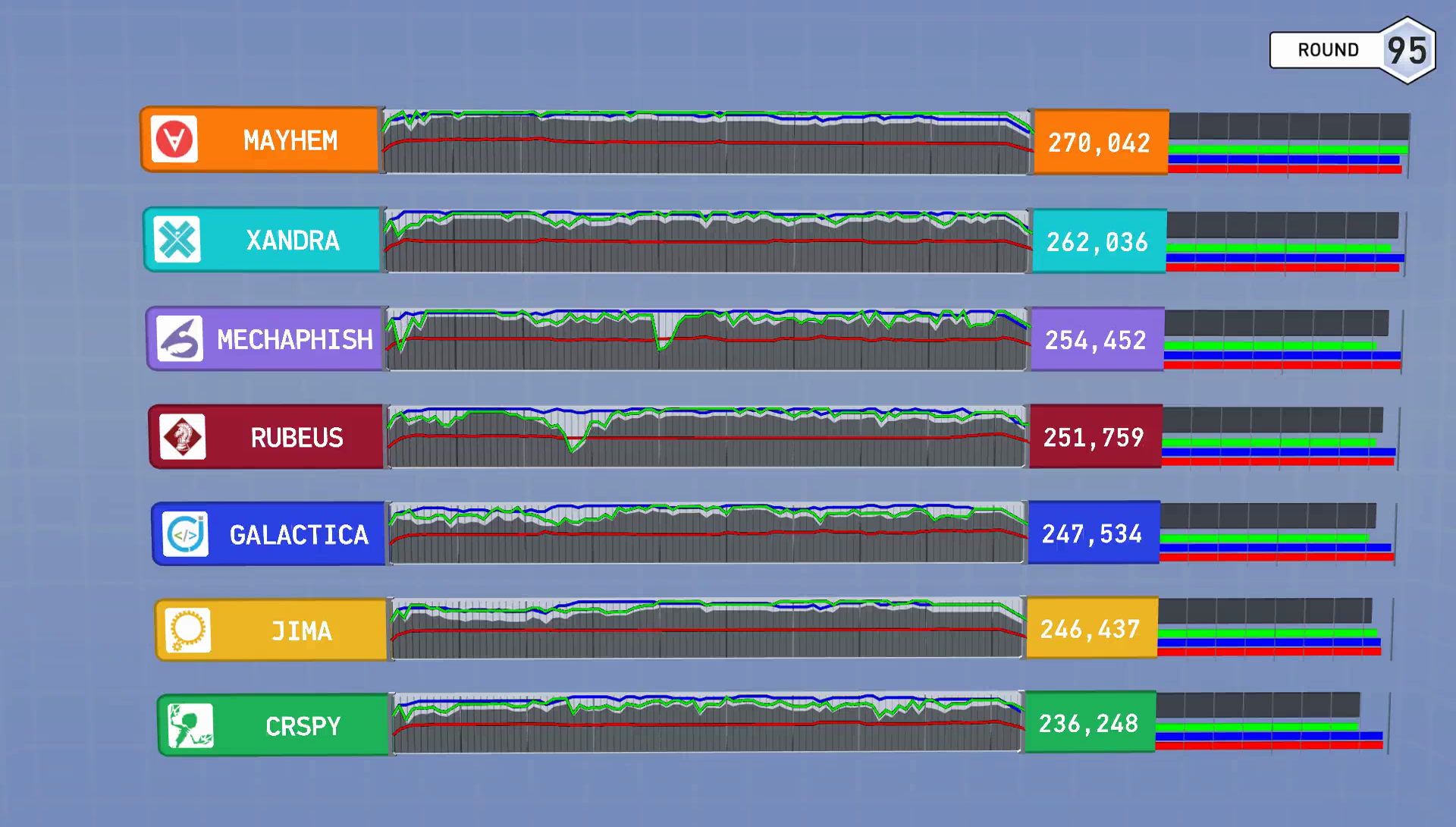

| TOTAL | 247534 | 246437 | 251759 | 236248 | 270042 | 254452 | 262036 | 258500 | 568800 |

Yes. You are reading that correctly. A CRS that literally did nothing could have placed 3rd. Setting up absolutely no software in the CFE could have won you $750,000. It would seem that, while not playing wasn't a winning move, it could still have been worth some serious money.

I do have to mention two caveats, though:

- Although I tried to figure out what the scoring algorithm was, I haven't been able to completely audit all the scores for the entire event. All the data above simply trusts that each round was scored appropriately by the organizers.

- I believe it's possible that a given CRS may have submitted a PoV after all other CRSs had patched. As a result, there may be PoVs that didn't work on anyone (and, thus, weren't scored), but that would have worked on a system doing absolutely no patching. One could probably determine definitively if this is or isn't the case by taking all submitted PoVs and re-throwing them. Unfortunately, that would take a fair amount of effort and I don't have time for it right now.

Regardless, I think it's still pretty obvious just how far these systems have to go before they're taking my job or making the world a safer place.

Design Thoughts

To wrap up, I thought a little bit about the design of the competition and how this might be avoided. I think the problem here is that, unlike the CQE, there was no "oracle" whose score counted. The CQE had teams losing points if any of the "known" vulnerabilities weren't patched in addition to losing points for vulnerabilities other teams uncovered. Here, the only way to lose points is if other teams find vulnerabilities.

Given that the stated purpose of CGC was to "create automatic defensive systems capable of reasoning about flaws, formulating patches, and deploying them on a network in real time", this might seem odd. How can you appropriately test if a system was capable of these things if you don't at least score them on flaws you already know about?

I think the answer lies in what the CGC organizers intended the competition to be about. We already have generic mitigations. CGC was about building systems that could, in the most ideal case, fix every flaw with a targeted patch instead. In order to develop those targeted patches, you have to find and understand the vulnerability. Demonstrating true understanding requires not only a targeted patch that works, but also a proof of that vulnerability. Not having an oracle means the only teams that consistently score are those with both a patch and a proof.

I still feel the design of the game was solid. The fact that a do-nothing system would have scored well should speak to the difficulty of the problem space and the incompleteness of each system. Not poor game design. I also have a feeling that teams didn't place as much emphasis on offense and availability (where it seems most teams missed or lost points) given the uncertainty about the CFE rules.

I'm not sure if there will be another Cyber Grand Challenge. If there is, I would expect to see this become less and less of a problem as time goes on and systems improve.